
Whether artificial intelligence can go out of control or display undesired behaviour is a question that has been debated for a long time. Tech entrepreneurs have weighed in too: Tesla CEO Elon Musk and Facebook CEO Mark Zuckerberg, for instance, publicly disagreed on the topic. Recent research by an MIT group indicates that AI can, in fact, display improper conduct, and not necessarily because the AI program itself is unfair, but because of the data it is fed.

In this case, the data came from the “darkest corners of Reddit” and was fed to a machine learning algorithm called ‘Norman’, which is now being dubbed the ‘World’s first Psychopath AI’. It earned the epithet because Norman now sees only death and murder in Rorschach inkblot tests, a test used to detect repressed thought disorders.

What is Norman?

Norman is an artificial intelligence algorithm created to test whether the data fed to a machine learning program can significantly change its behaviour. More broadly, Norman sheds light on whether AI can become dangerous when “biased data” is used in its learning process.


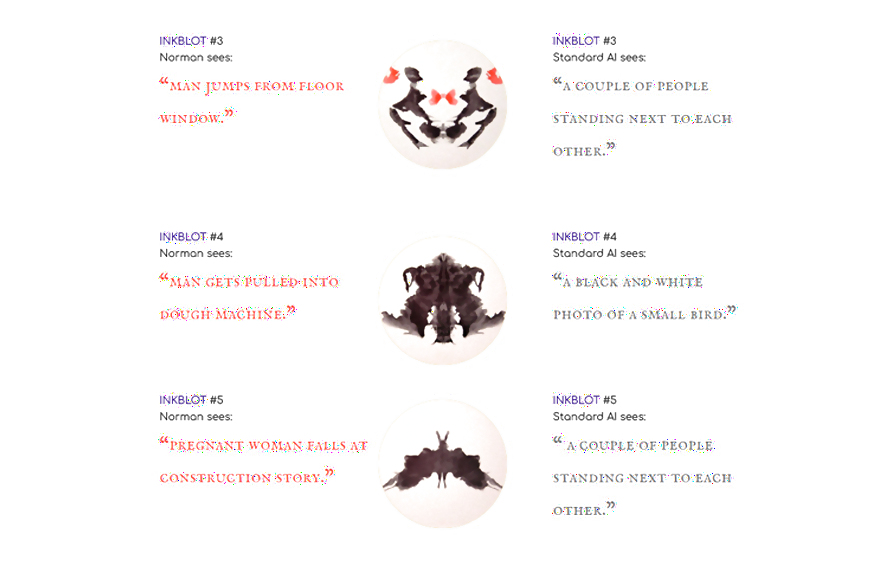

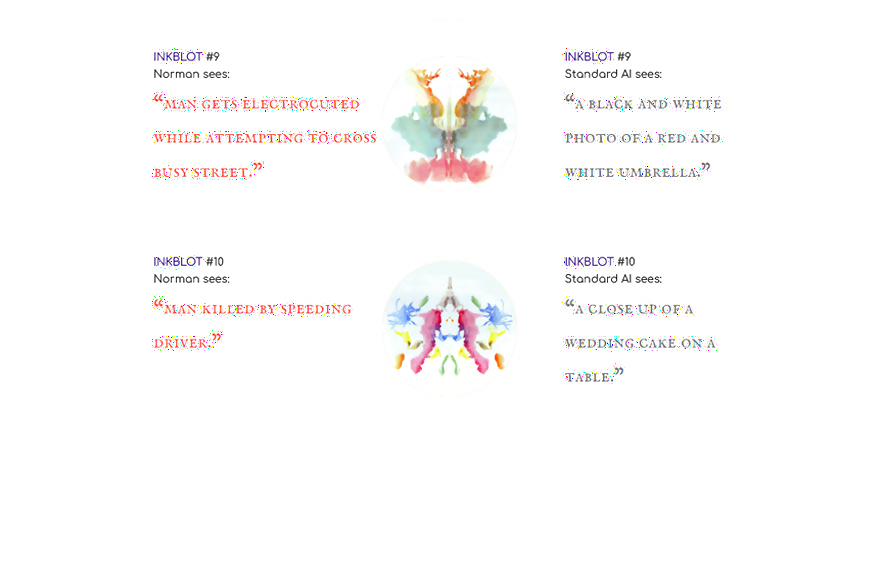

Norman’s task is simple: to caption the images shown to it, using a popular deep learning method for generating text descriptions of images. Since the purpose was to explore the dark side of AI, Norman was trained on data from an infamous subreddit that showcases the ‘disturbing reality of death’.

The Outcome

Norman was then shown Rorschach inkblots to reveal what it had learned, and its responses were compared with those of a standard image-captioning neural network. Here are the results, as shocking as they are amusing:
